Introduction

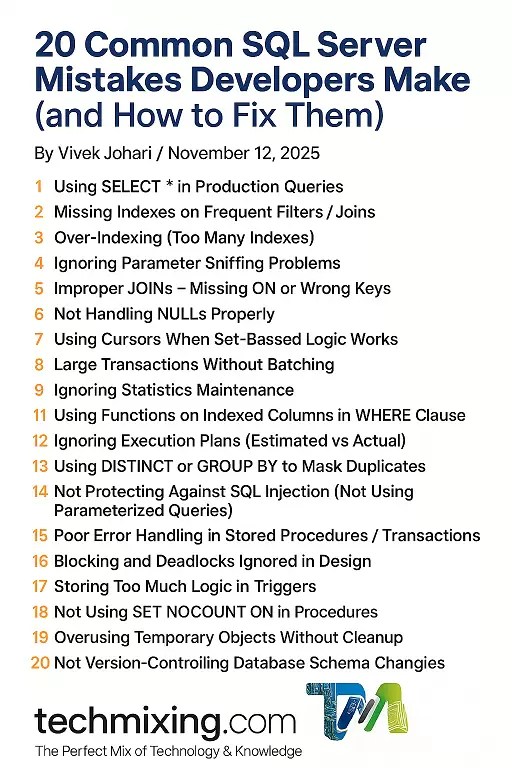

Even the best developers and DBAs make mistakes when writing SQL – it happens to everyone. Some of these slip-ups might just slow things down a little, while others can turn into full-blown performance or maintenance headaches once they hit production.

Avoiding these mistakes doesn’t just make your queries faster – it also improves your app’s reliability, boosts security, and makes long-term maintenance so much smoother.

In this article, based on my 2 decades of SQL experience, I’ve rounded up 20 of the most common SQL Server mistakes developers make – along with what they break, why they happen, and exactly how to fix or avoid them. This list is also part of my checklist for the code reviews.

Bookmark this one – it’s a great checklist for code reviews, developer onboarding, or post-mortem tuning sessions.

1. Using SELECT * in Production Queries

Problem: This statement fetches all columns, including large text/BLOB data and columns not needed by the application. This bloats network traffic, uses excessive memory, and makes the query fragile to table changes.

Fix: We should always list required columns explicitly. It reduces I/O and allows narrow covering indexes.

-- Bad

SELECT * FROM Orders;

-- Good

SELECT OrderID, OrderDate, TotalAmount FROM Orders;

2. Missing Indexes on Frequent Filters / Joins

Problem: Missing Indexes on the frequent filters/ joins result in Full table scans, high logical reads. It led to slow execution of queries.

Fix: Add appropriate clustered/ nonclustered indexes on columns used in WHERE, JOIN, and ORDER BY. Use DMVs or Query Store to discover candidates columns on which these indexes can be created.

CREATE INDEX IX_Orders_CustomerID ON Orders(CustomerID);

3. Over-Indexing (Too Many Indexes)

Problem: Creating too many indexes on a table result in heavy write penalty for INSERT/UPDATE/DELETE. It also increased storage and maintenance.

Fix: Balance read vs write needs. Consolidate overlapping indexes and drop unused ones via sys.dm_db_index_usage_stats.

4. Ignoring Parameter Sniffing Problems

Problem: Cached plan optimized for one parameter performs poorly for others.

Fixes: OPTION (RECOMPILE) for sensitive statements, OPTIMIZE FOR UNKNOWN, or use plan guides and parameterization strategies.

5. Improper JOINs – Missing ON or Wrong Keys

Problem: Improper Joins with missing ON clause or wrong keys used with the ON clause can generates Cartesian products or duplicate rows which led to incorrect results.

Fix: Always write explicit JOIN ... ON with correct key columns and verify relationships (FKs).

-- Risky (implicit join)

SELECT * FROM A, B WHERE A.x = B.y;

-- Clear (explicit join)

SELECT * FROM A JOIN B ON A.x = B.y;

6. Not Handling NULLs Properly

Problem: Unexpected results because NULL != NULL.

Fix: Use IS NULL / IS NOT NULL, COALESCE() for comparisons, and be explicit about nullable columns in filters.

7. Using Cursors When Set-Based Logic Works

Problem: While working with large dataset, Cursors are slow and resource-heavy.

Fix: Use Set based approach instead of Cursor by converting row-by-row processing to set-based operations (joins, window functions, MERGE, UPDATE with joins).

8. Large Transactions Without Batching

Problem: Using Large Transactions results in long-held locks, bloated transaction log, blocking, and potential timeouts.

Fix: Batch large INSERT/UPDATE operations (e.g., process 10k rows per loop) and commit often. If cursors are required, use FAST_FORWARD or READ_ONLY cursors

WHILE (1=1)

BEGIN

WITH cte AS (

SELECT TOP (10000) * FROM Staging WHERE Processed = 0

)

UPDATE t SET Processed = 1

FROM cte c JOIN Staging t ON t.Id = c.Id;

IF @@ROWCOUNT = 0 BREAK;

END

9. Ignoring Statistics Maintenance

Problem: Outdated statistics results in bad cardinality estimates which let to poor execution cache plans.

Fix: Regularly update stats to help the optimizer choose better plans. Schedule sp_updatestats, or targeted UPDATE STATISTICS, and monitor auto-update behavior.

10. Implicit Data Type Conversions

Problem: Implicit conversions prevent index seeks and cause scans.

Fix: Ensure data types match between columns and parameters/literals; cast explicitly only when necessary.

-- If CustomerID is INT:

WHERE CustomerID = '123'; -- forces conversion

WHERE CustomerID = 123; -- best

11. Using Functions on Indexed Columns in WHERE Clause

Problem: Using a function on the where clause column prevents SQL server to use index on that column which result in poor performance. For example, Functions (e.g., YEAR(date) = 2024) disable index seeks and cause scans.

Fix: Rewrite filters to be SARGable (search-arg-able), e.g. date >= '2024-01-01' AND date < '2025-01-01'.

12. Ignoring Execution Plans (Estimated vs Actual)

Problem: Treating SQL like a black box. A query might run fast on a development machine with small data, but fail catastrophically in production.

Fix: Always Analyze the Execution Plan. Use SQL Server Management Studio (SSMS) to view the Actual Execution Plan for your critical queries to find high-cost operators like Table Scans, Sorts, and Key Lookups..

13. Using DISTINCT or GROUP BY to Mask Duplicates

Problem: Sometimes we hides data model or join problems by using Distinct or Group By clause in the end to hide/mask the duplicate records. It adds extra cost.

Fix: Fix the root cause (join conditions or grouping) instead of applying DISTINCT as a bandaid/ temporary fix.

14. Not Protecting Against SQL Injection (Not Using Parameterized Queries)

Problem: Security vulnerabilities when building SQL with concatenation. Concatenating user input directly into an SQL string (e.g., cmd = "SELECT * FROM Users WHERE ID = '" + UserID + "'"). This is the primary cause of SQL Injection attacks.

Fix: Validate inputs. Use Parameters or Stored Procedures. Always use parameterized queries (e.g., SqlParameter in C#, or prepared statements) to separate the SQL command from the user data.

-- Parameterized use from app code; avoid building SQL with string concatenation.

15. Poor Error Handling in Stored Procedures / Transactions

Problem: Uncommitted transactions, hidden errors, or partial updates. If exception handling is not done properly, query execution may break in midway and wrong data is inserted into the tables which will corrupt the database.

Fix: Proper error handling using Try….Catch should be done to manage log and errors. Catch block should give proper Error message.

In case of any errors, execute Rollback transactions within the CATCH block. This prevents tables data from getting corrupted due to uncommitted data. It helps in maintaining the database integrity.

BEGIN TRY

BEGIN TRAN;

-- operations

COMMIT TRAN;

END TRY

BEGIN CATCH

IF XACT_STATE() <> 0 ROLLBACK TRAN;

THROW;

END CATCH;

16. Blocking and Deadlocks Ignored in Design

Problem: Application contention and degraded throughput.

Fix: Keep transactions short, use appropriate isolation levels (READ COMMITTED SNAPSHOT where helpful), add appropriate indexes to avoid blocking scans, and implement deadlock retry logic at app level.

17. Storing Too Much Logic in Triggers

Problem: Triggers can hide expensive operations, lead to recursion, and make behavior implicit.

Fix: Use triggers sparingly; prefer stored procedures or application-layer logic for complex flows. Keep triggers minimal and well-documented.

18. Not Using SET NOCOUNT ON in Procedures

Problem: Extra DONE_IN_PROC messages increase network chatter for bulk operations.

Fix: We should use “set not count on” at the top of stored procedures/ code block. Then use “set not count off” at the end of the stored procedures/ code block. This is necessary unless you need to know how many rows the query or stored procedure affects. This will reduce unnecessary overhead.

19. Overusing Temporary Objects Without Cleanup

Problem: Excessive temp tables or table variables cause TempDB pressure and spills.

Fix: Prefer table variables for small datasets, temp tables for larger sets (and clean them up). Monitor TempDB usage and configure multiple data files when needed.

20. Not Version-Controlling Database Schema Changes

Problem: Untracked schema drift, risky production changes, and deployment nightmares.

Fix: Use source control (Git) for DDL scripts and a DB migration tool (Flyway, Liquibase, SSDT, EF Migrations). Automate deployments via CI/CD pipelines.

Reference Table for Summary

| # | Mistake | Fix |

|---|---|---|

| 1 | SELECT * | List explicit columns |

| 2 | Missing indexes | Add targeted indexes |

| 3 | Too many indexes | Consolidate & drop unused |

| 4 | Parameter sniffing | RECOMPILE / OPTIMIZE FOR |

| 5 | Bad JOINs | Explicit JOIN..ON with keys |

| 6 | NULL mishandling | Use IS NULL / COALESCE |

| 7 | Cursors | Use set-based SQL |

| 8 | Large transactions | Batch operations |

| 9 | Old stats | Update statistics regularly |

| 10 | Implicit conversions | Match data types |

| 11 | Non-SARGable filters | Make filters SARGable |

| 12 | Ignore plans | Review actual plans |

| 13 | Mask duplicates | Fix join logic |

| 14 | SQL injection | Parameterize queries |

| 15 | Poor error handling | TRY/CATCH + XACT_STATE |

| 16 | Blocking/deadlocks | Short transactions + retry |

| 17 | Heavy triggers | Move logic to SPs/app |

| 18 | Missing NOCOUNT | SET NOCOUNT ON |

| 19 | TempDB misuse | Clean up & monitor TempDB |

| 20 | No schema control | Use migrations + CI/CD |

Best Practices Checklist (for code reviews)

- No

SELECT *in production queries - Queries return only needed columns and rows

- Execution plans checked for top offenders

- Proper indexes exist and are maintained

- Statistics up-to-date for large tables

- Parameter types match column types

- Transactions are short and batched when needed

- No raw SQL concatenation from user input

- Error handling and retries implemented

- Schema changes version-controlled

Final Thoughts

Many SQL problems are avoidable with discipline, tooling, and a culture of review. Start with measurable data (Query Store, DMVs), enforce standards in code reviews, and automate maintenance (index rebuilds, stats updates). Small fixes like removing SELECT * or adding a well-placed index, often yield the largest gains.

Read more articles on Performance tuning, click here

Read more articles on SQL Server, click here

Enjoyed this post? Support the blog by liking, sharing, and subscribing for more articles on Data, AI & Cloud.

Discover more from Technology with Vivek Johari

Subscribe to get the latest posts sent to your email.